NomicMU is a powerful system for simulating virtual worlds. In fact, it is much more complete than FLP's simplistic fluent model, since among other things NomicMU integrates the event calculus, an EC planner, and a first-rate NLU/NLG and dialog system. FLP has a PDDL planner, but PDDL has many limitations: it assumes perfect information, it does not fail gracefully, and it cannot create new objects or modify the goal stack at run time, among others. What is needed instead is a hybrid pathfinding/planner system that can do all of this. Fortunately, after listening to dmiles explain NomicMU, I realized that it could. It also generates a goal stack which is itself a behavior tree that can admit variables.

So NomicMU's interface is already quite similar to what FLP's will be. I have begun converting some existing behavior trees into the aXiom format found in the adv_axiom.pl file.

So NomicMU is supposed to say:

aindilis wants to> be in the restroom. aindilis grabs his cellphone and keys. aindilis walks to the door. aindilis tries to open the door. aindilis notices the door won't open. aindilis tries to unlock the door. aindilis notices the door is open. aindilis walks through the door. aindilis shuts the door behind him. aindilis locks the door. aindilis moves the cat barrier into position to keep the cat out. aindilis tries to open the restroom door. aindilis notices the restroom door is open. aindilis walks through the restroom door.

So taking this use case as an example, there are a few things to keep in mind here.

First, aindilis could have spoken to Alexa and said "Alexa, tell David I want to be in the restroom" (David being the easily recognizable working name of the FLP project's Alexa skill). Or, eventually, "Hey Google, ...". Or he could have tapped the media button on the BT headset. Or aindilis could have sent a Jabber message to FLP from his phone, entered a text field value or selected a drop-down value in the mobile FLP web-app client and submitted it, or chatted from one of many interfaces on his computer, such as IRC, the command line, the Emacs FLP interface, etc.

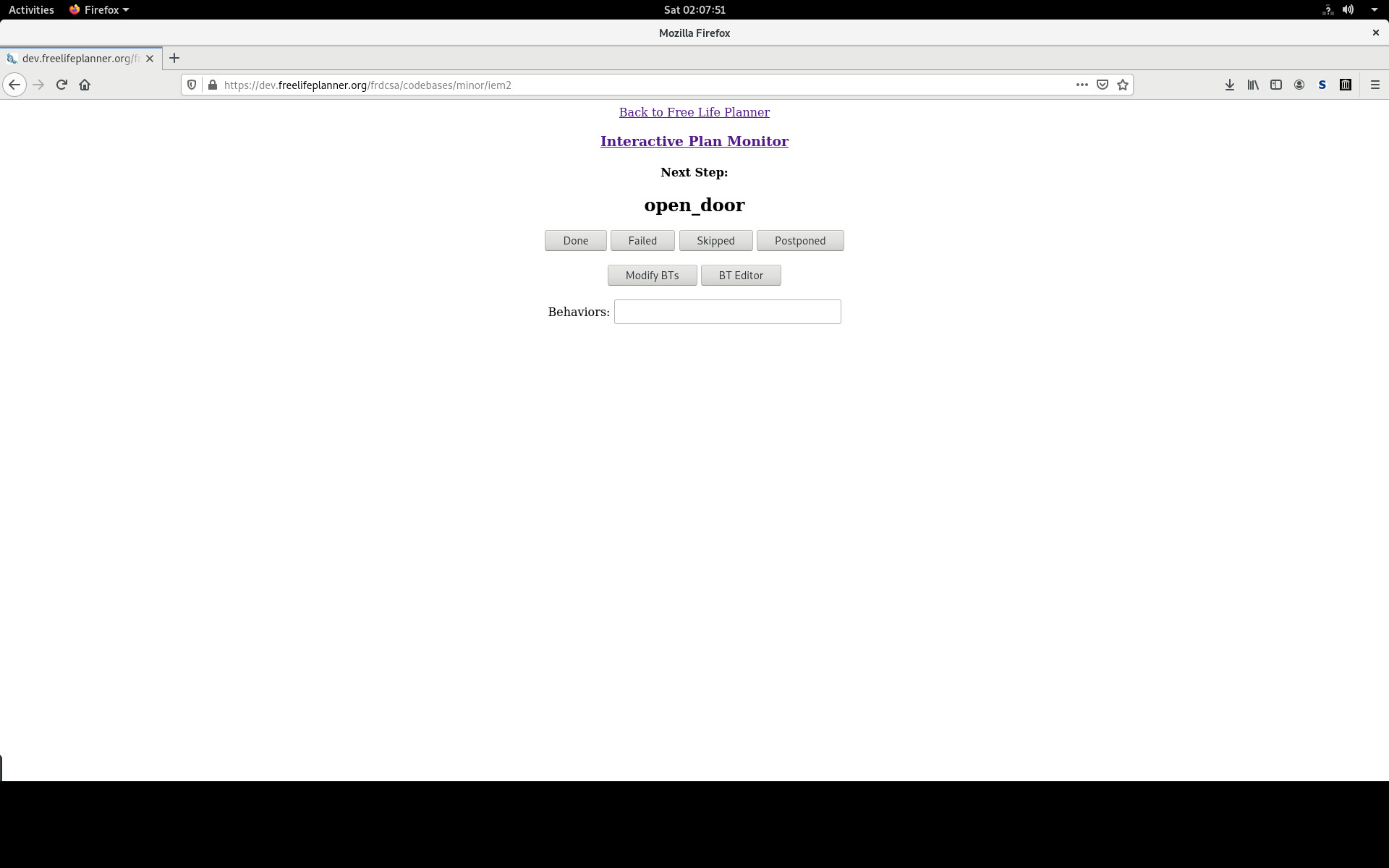

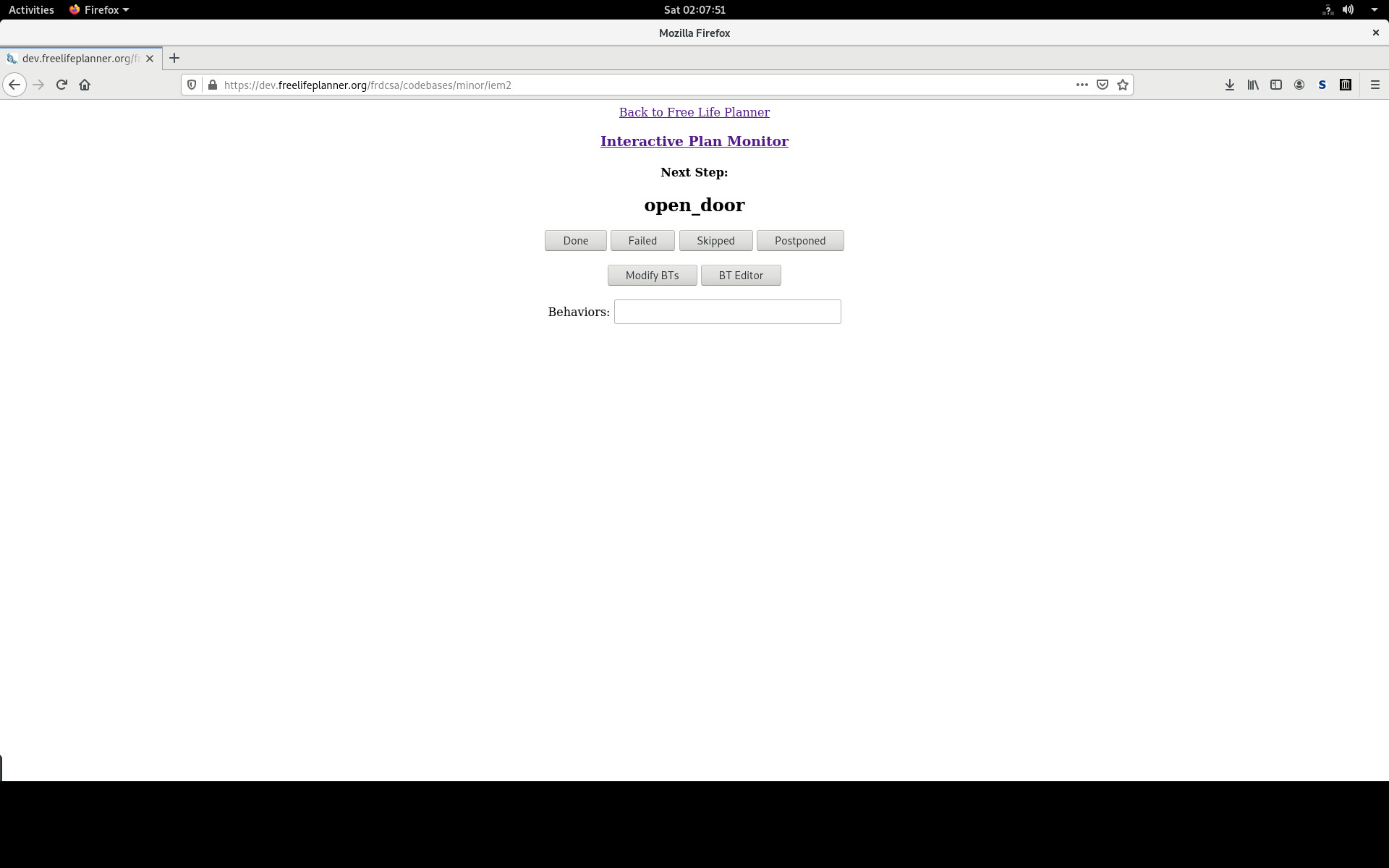

Then the IEM on the phone kicks in (perhaps activated via a websocket, and maybe wrapped with Apache Cordova or some other web-app-to-mobile-app wrapper for Android, or simply browsed to by aindilis). Here is the trace of IEM on the phone.

- Submitted from any mode to system: aindilis wants to> be in the restroom.
- Message sent to IEM to "aindilis should grab his cellphone and keys".
- IEM reads over loud speakers and bluetooth headset, sends Jabber message to phone.
- The FLP web app is opened, either automatically if possible or otherwise opened by aindilis.
- aindilis confirms the following prompt in the FLP web-app: aindilis grabs his cellphone and keys.
- The IEM system updates the world state, and proceeds with the "real-time simulation":
- Message sent to IEM to "aindilis should walk to the door".
- aindilis confirms the following prompt in the FLP web-app: aindilis walks to the door.
- The IEM system updates the world state, and proceeds with the "real-time simulation":
- Message sent to IEM to "aindilis should open the door".
- aindilis *DISCONFIRMS* the following prompt in the FLP web-app: aindilis opens the door.
- The IEM system updates the world state, and proceeds with the "real-time simulation", this time branching to a fall-back node in the behavior tree (or however that is done from EC):
- Message sent to IEM to "aindilis should unlock the door".
- aindilis confirms the following prompt in the FLP web-app: aindilis unlocks the door.

So that is the basic gist.
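The confirm/disconfirm loop in the trace above can be sketched as a tiny behavior tree with sequence and fallback nodes. This is only an illustration in Python, not NomicMU's or the IEM's actual code: the class names `Action`, `Sequence`, `Fallback`, the `run` function, and the simulated user are all assumptions made up for the example, and the real system would drive this from the event calculus rather than a hard-coded tree.

```python
from dataclasses import dataclass
from typing import Callable, List, Union


@dataclass
class Action:
    """Leaf node: a single step the user is prompted to confirm."""
    prompt: str


@dataclass
class Sequence:
    """Succeeds only if every child succeeds, in order."""
    children: List["Node"]


@dataclass
class Fallback:
    """Tries children in order and succeeds on the first one that succeeds."""
    children: List["Node"]


Node = Union[Action, Sequence, Fallback]


def run(node: Node, confirm: Callable[[str], bool], log: List[str]) -> bool:
    """Walk the tree, asking `confirm` about each action and logging the answer."""
    if isinstance(node, Action):
        ok = confirm(node.prompt)
        log.append(("confirmed: " if ok else "disconfirmed: ") + node.prompt)
        return ok
    if isinstance(node, Sequence):
        # all() short-circuits, so later steps are skipped after a failure.
        return all(run(child, confirm, log) for child in node.children)
    if isinstance(node, Fallback):
        # any() short-circuits, so later alternatives are skipped after a success.
        return any(run(child, confirm, log) for child in node.children)
    raise TypeError(f"unknown node type: {node!r}")


def make_simulated_user() -> Callable[[str], bool]:
    """Hypothetical stand-in for aindilis tapping confirm/disconfirm in the web app."""
    state = {"locked": True}

    def confirm(prompt: str) -> bool:
        if "unlocks the door" in prompt:
            state["locked"] = False
            return True
        if "opens the door" in prompt:
            return not state["locked"]  # opening fails while the door is locked
        return True

    return confirm


# The first part of the restroom scenario, with a fallback for the locked door.
tree = Sequence([
    Action("aindilis grabs his cellphone and keys"),
    Action("aindilis walks to the door"),
    Fallback([
        Action("aindilis opens the door"),
        Sequence([
            Action("aindilis unlocks the door"),
            Action("aindilis opens the door"),
        ]),
    ]),
])
```

Calling `run(tree, make_simulated_user(), log)` with an empty `log` list walks the same path as the trace: the first attempt to open the door is disconfirmed, the fallback branch unlocks it, and the retry succeeds.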

This is basically the complete process, EXCEPT for real-time exogenous events such as scheduled activities (which should definitely include warnings broadcast ahead of time), timers, timeouts, and so on!

- Message sent to IEM to "EXOGENOUS EVENT: aindilis should take the pizza out of the oven"
- aindilis confirms that this is the new goal, and the old goal to go to the restroom is pushed back on the goal stack.
- Message sent to IEM to "aindilis should walk to the kitchen".
- ...
- Message sent to IEM to "aindilis should wash his hands".

At any point in time aindilis can modify the goal stack with verbal or other commands. Suppose he remembers that something needs doing: he interrupts the system to get that done first.
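The interruption behavior described here, for both exogenous events and user commands, can be pictured as a plain last-in, first-out goal stack. The Python below is a minimal sketch under that assumption; the `GoalStack` class and its method names are hypothetical and do not reflect how NomicMU or FLP actually store goals.

```python
from typing import List, Optional


class GoalStack:
    """Last-in, first-out stack of goals; the top goal is the one being pursued."""

    def __init__(self) -> None:
        self._goals: List[str] = []

    def push(self, goal: str) -> None:
        """Make `goal` the current goal, suspending whatever was on top."""
        self._goals.append(goal)

    def current(self) -> Optional[str]:
        """Return the goal being pursued now, or None if nothing is pending."""
        return self._goals[-1] if self._goals else None

    def complete_current(self) -> Optional[str]:
        """Pop the finished goal and return the goal that resumes, if any."""
        if self._goals:
            self._goals.pop()
        return self.current()

    def pending(self) -> List[str]:
        """List goals from the current one down to the oldest suspended one."""
        return list(reversed(self._goals))


stack = GoalStack()
stack.push("be in the restroom")
# Exogenous event, confirmed by aindilis as the new goal:
stack.push("take the pizza out of the oven")
```

At this point `stack.current()` is the pizza goal, and `stack.complete_current()` returns the suspended restroom goal once the pizza has been dealt with. A verbal command from aindilis would go through the same `push` call.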

Here are some sample idealized behavior trees:

    aXiom(leave_house(Person,House)) -->
      turn_down_heat(Person,House).

    aXiom(start_drive_car(Person,Car)) -->
      check_tire_pressure(Person,Car),
      get_in_car(Person,Car),
      ensure_have_enough_fuel_remaining(Person,Car),
      put_on_seat_belt(Person,Car).

    aXiom(buy_groceries(Person)) -->
      ensure_you_are_replete(Person),
      resides_at(Person,House),
      located_at(KitchenTable,House),
      isa(KitchenTable,kitchenTable),
      ensure_kitchen_table_is_cleared(Person,KitchenTable),
      (   buy_groceries_at(Person,nearestFn(aldi))
      ;   buy_groceries_at(Person,nearestFn(walmart))
      ).

    aXiom(buy_groceries_at(Person,AldiStore)) -->
      storeIsPartOfChain(AldiStore,aldi),
      ensure(possesses(Person,Quarter)),
      isa(Quarter,quarter),
      ensure(possesses(Person,Bags)),
      isa(Bags,shoppingBags),
      go_to(Person,AldiStore).

You get the picture.
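To make the role of the variables concrete, here is a rough Python sketch of how rules like these could be expanded into a flat list of steps once `Person` and `Car` are bound to actual individuals. It is not how NomicMU evaluates aXiom rules (Prolog uses unification, which this plain substitution only approximates), and the `RULES` table layout, the `expand` function, the `go_for_drive` rule, and the constants `house1` and `car1` are all assumptions invented for the example.

```python
from typing import Dict, List, Tuple

Term = Tuple[str, Tuple[str, ...]]          # (functor, argument names)
Rule = Tuple[Tuple[str, ...], List[Term]]   # (parameter names, body terms)

RULES: Dict[str, Rule] = {
    "leave_house": (("Person", "House"), [
        ("turn_down_heat", ("Person", "House")),
    ]),
    "start_drive_car": (("Person", "Car"), [
        ("check_tire_pressure", ("Person", "Car")),
        ("get_in_car", ("Person", "Car")),
        ("ensure_have_enough_fuel_remaining", ("Person", "Car")),
        ("put_on_seat_belt", ("Person", "Car")),
    ]),
    # Hypothetical composite rule, not in the original, to show nesting.
    "go_for_drive": (("Person", "House", "Car"), [
        ("leave_house", ("Person", "House")),
        ("start_drive_car", ("Person", "Car")),
    ]),
}


def expand(functor: str, args: Tuple[str, ...]) -> List[str]:
    """Recursively expand a goal into primitive steps with variables substituted."""
    if functor not in RULES:
        # No rule for this functor: treat it as a primitive step.
        return [f"{functor}({','.join(args)})"]
    params, body = RULES[functor]
    if len(params) != len(args):
        raise ValueError(f"{functor} expects {len(params)} arguments, got {len(args)}")
    binding = dict(zip(params, args))
    steps: List[str] = []
    for sub_functor, sub_args in body:
        # Replace each parameter name with its bound value; leave constants alone.
        bound = tuple(binding.get(name, name) for name in sub_args)
        steps.extend(expand(sub_functor, bound))
    return steps


steps = expand("go_for_drive", ("aindilis", "house1", "car1"))
```

Here `steps` starts with `turn_down_heat(aindilis,house1)` and continues with the four car steps bound to `car1`. Disjunctions such as the Aldi/Walmart choice and `ensure(...)` wrappers are left out to keep the sketch short.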

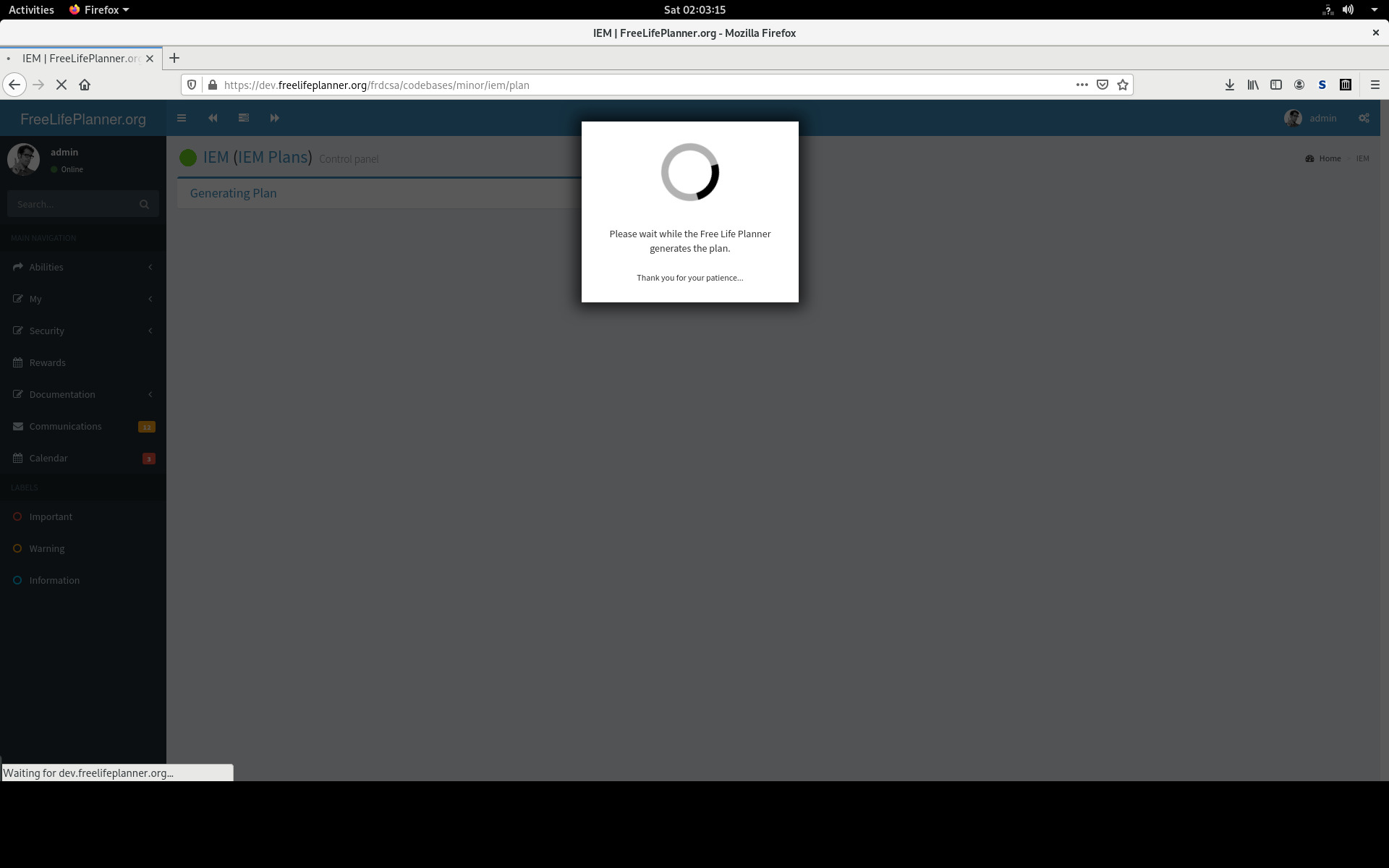

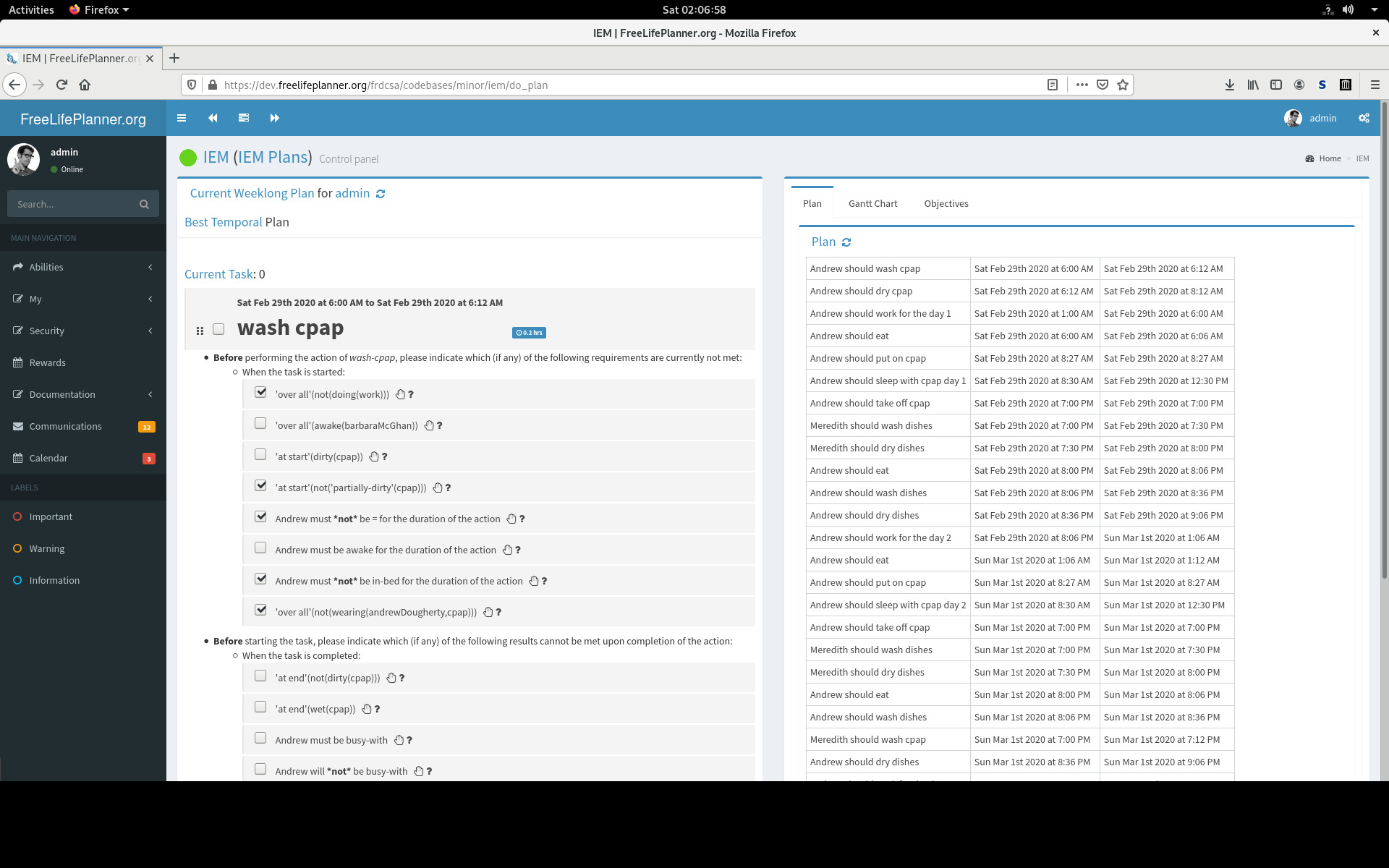

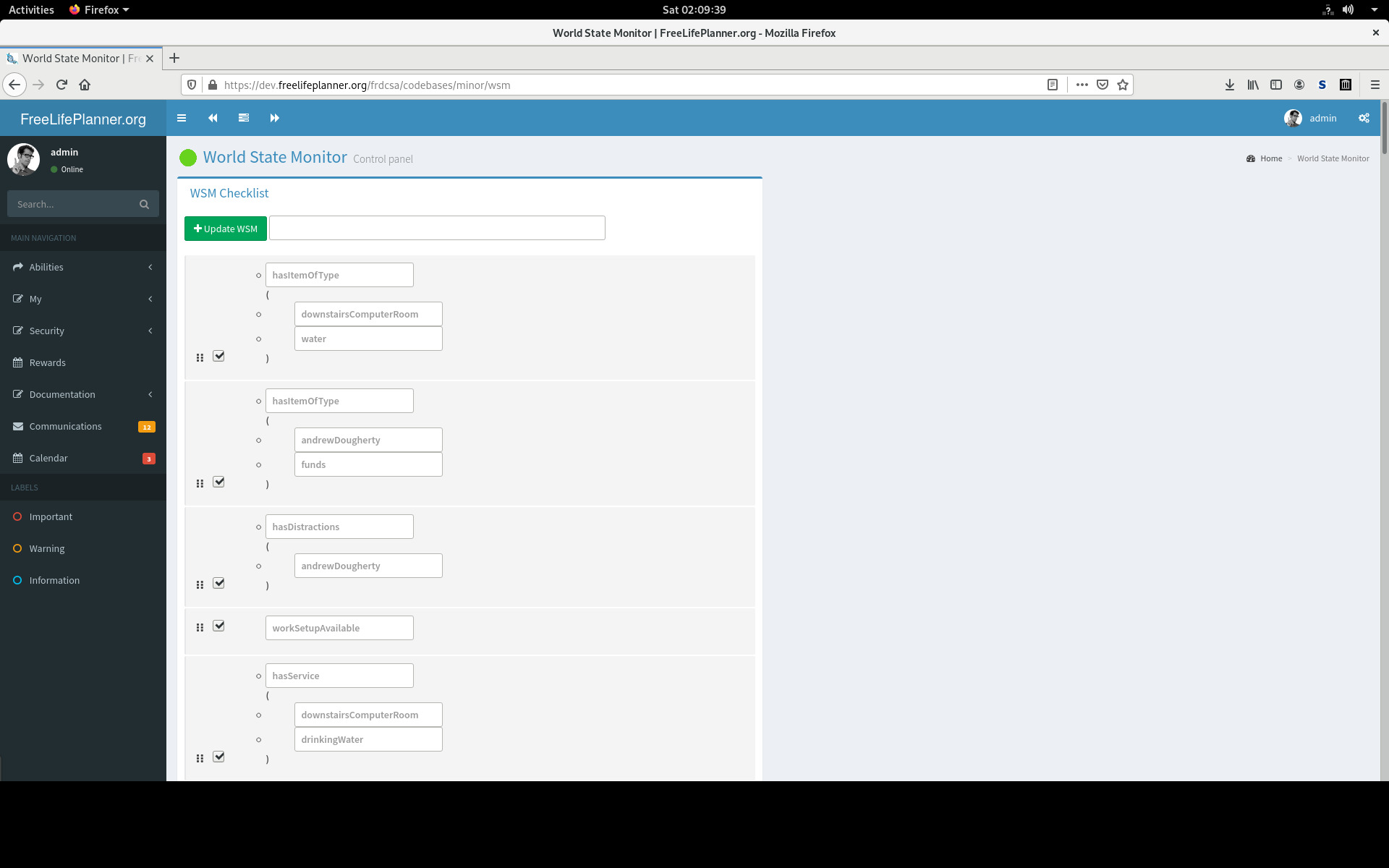

Here is a screenshot of the IEM:

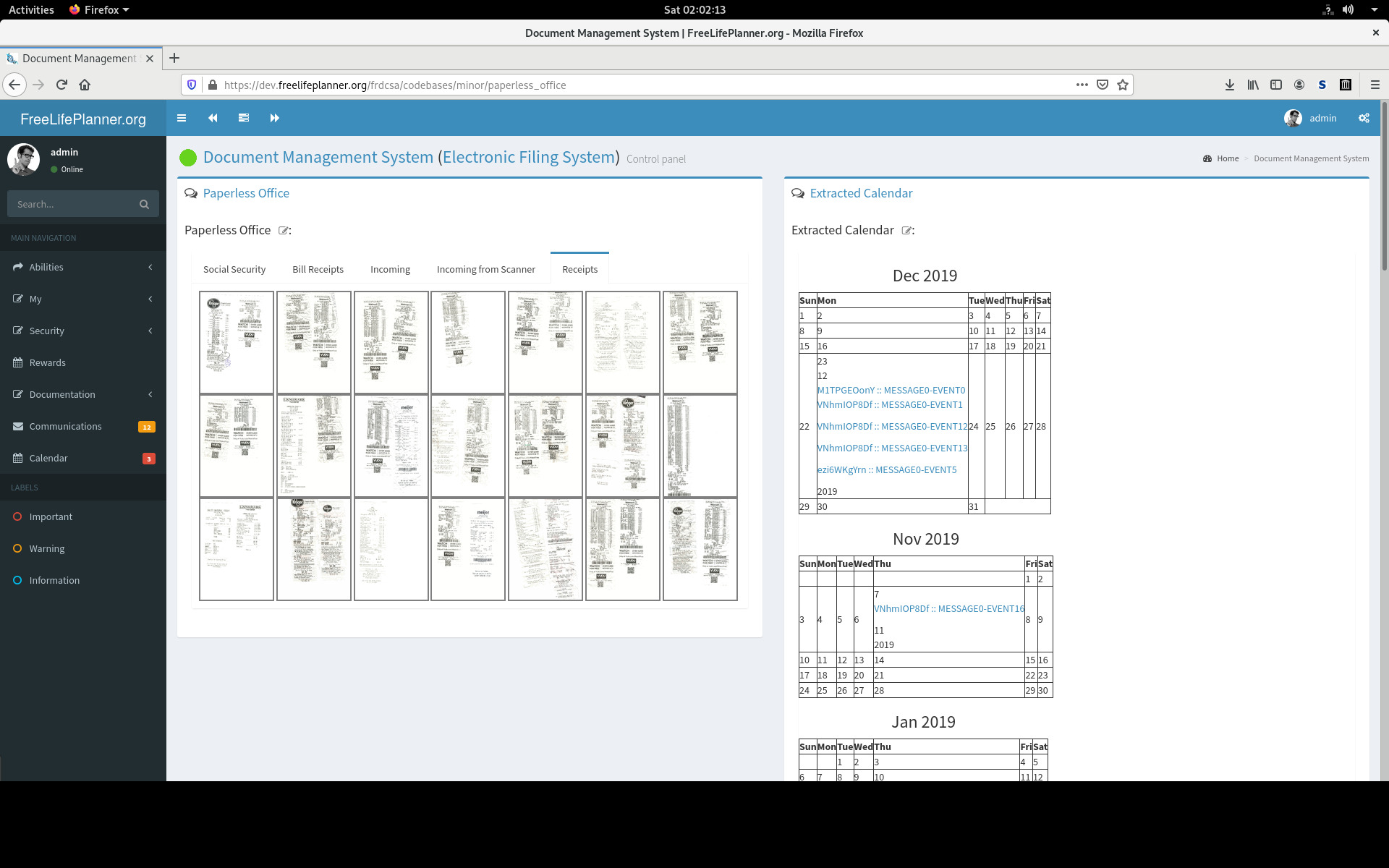

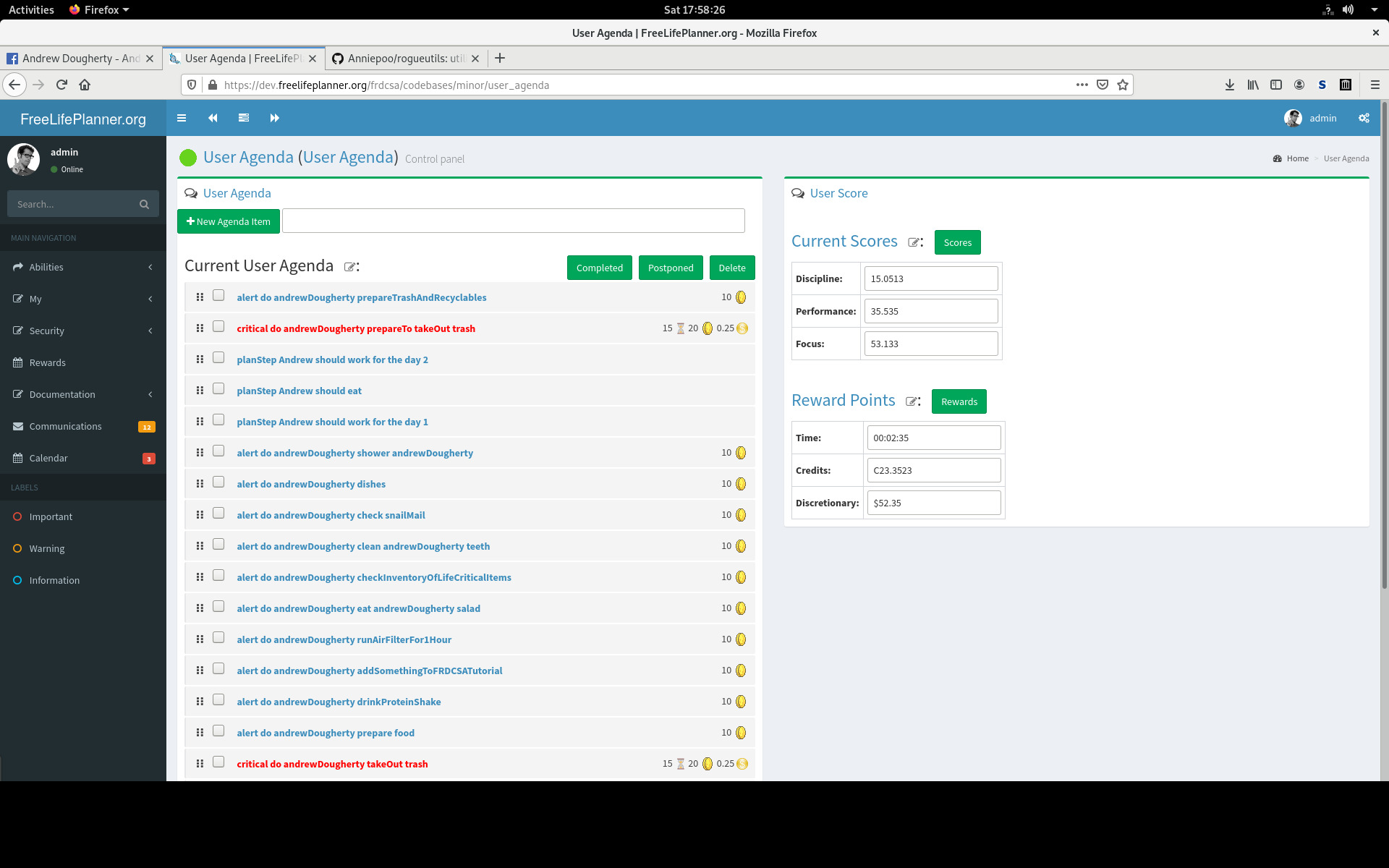

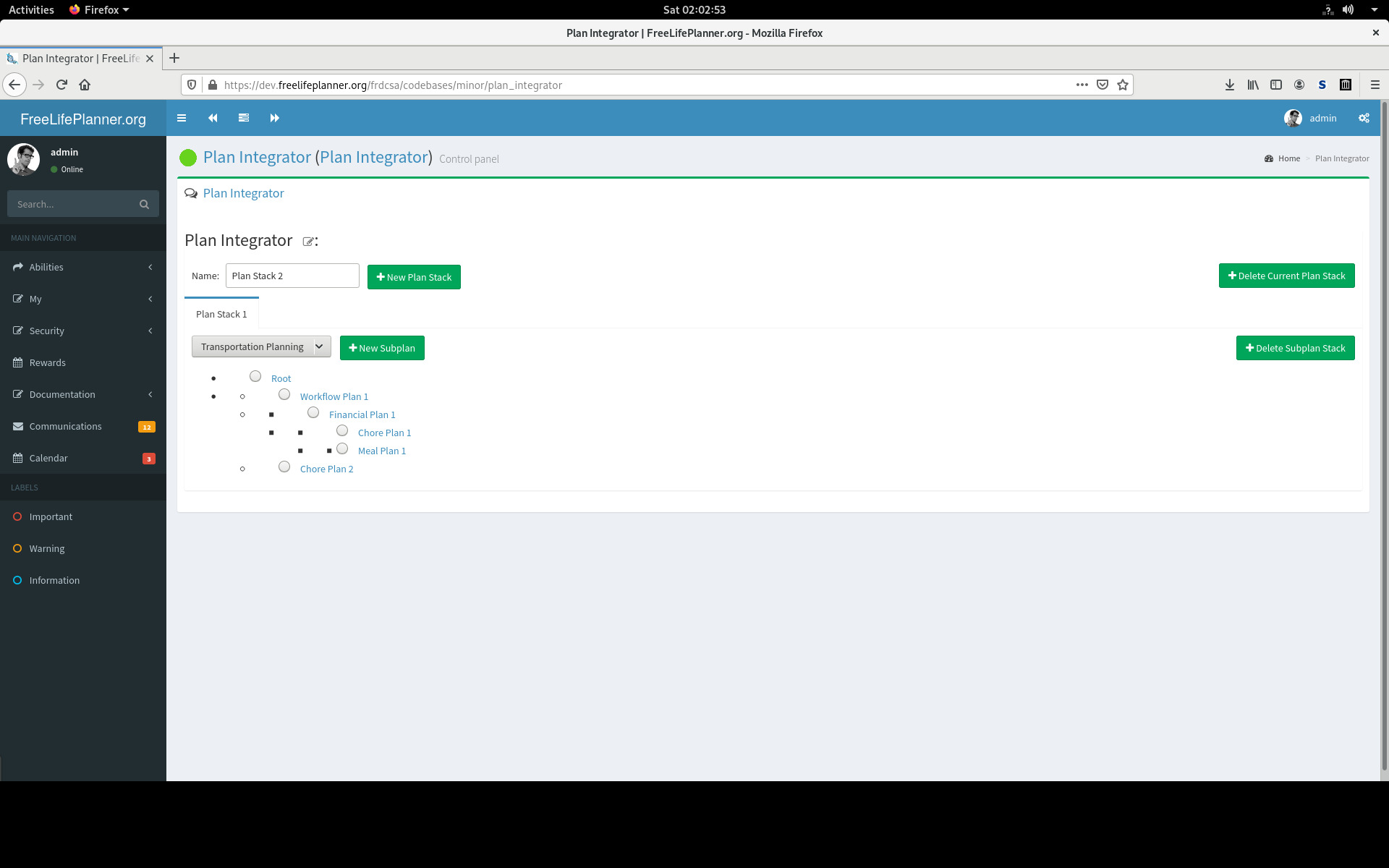

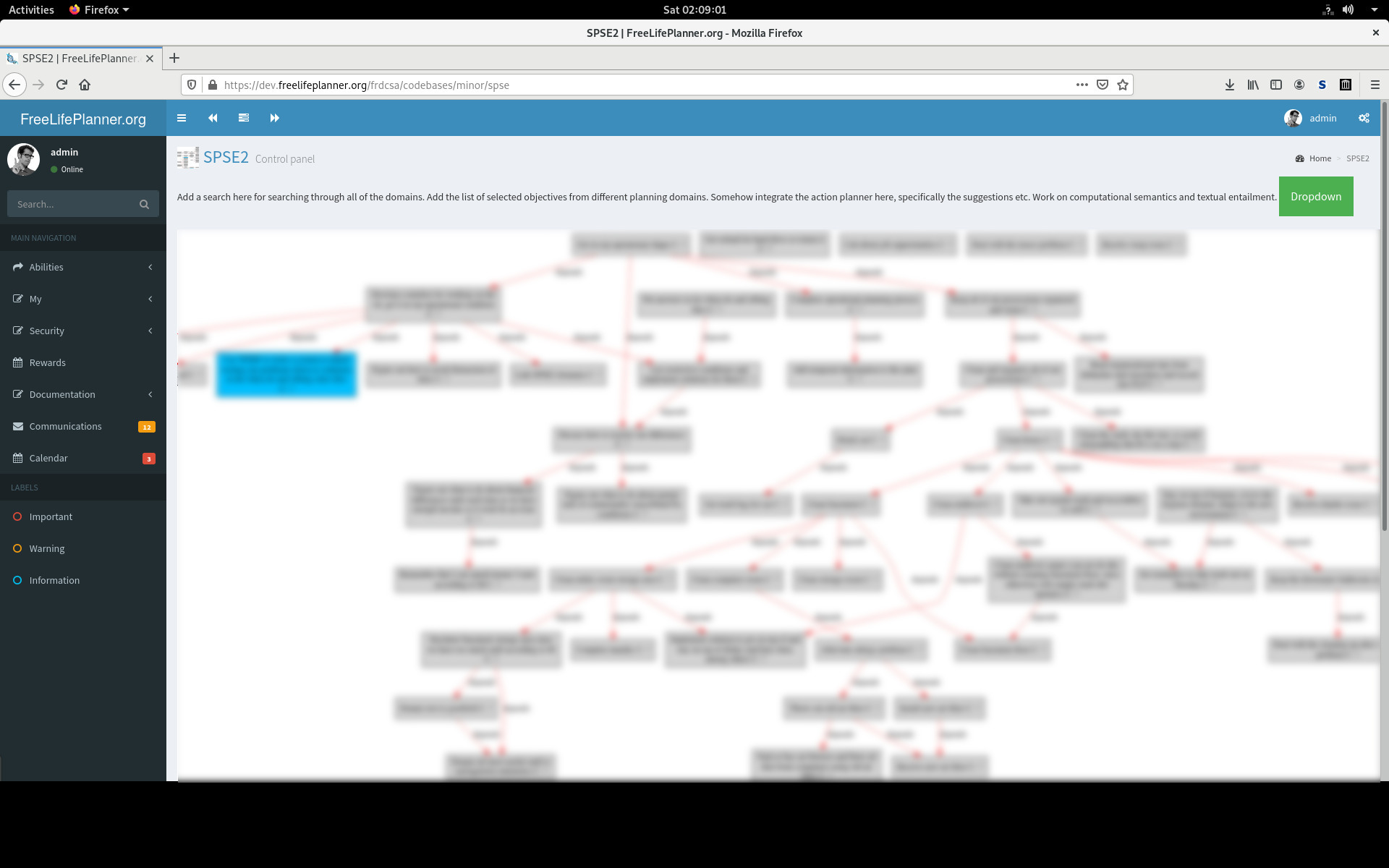

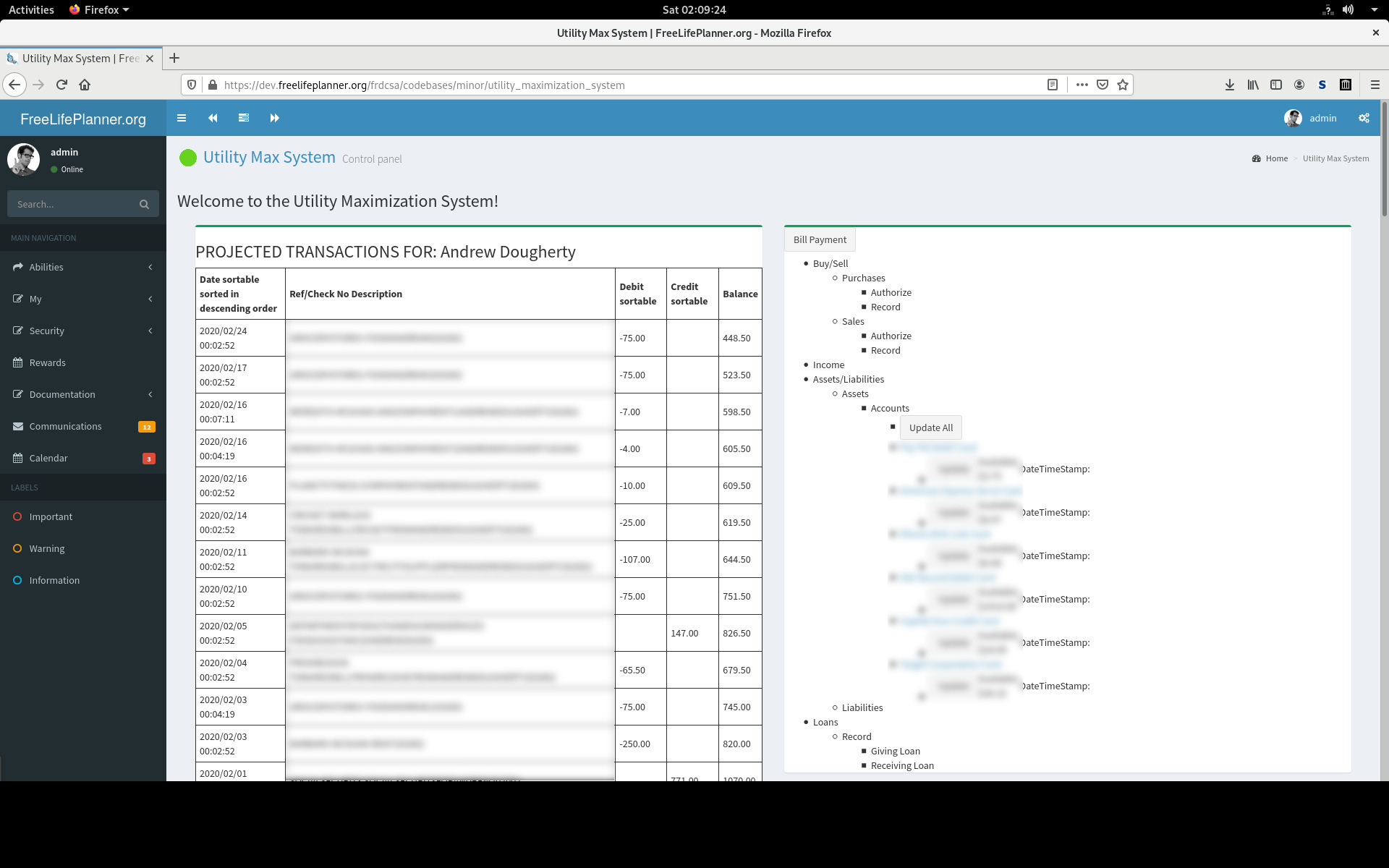

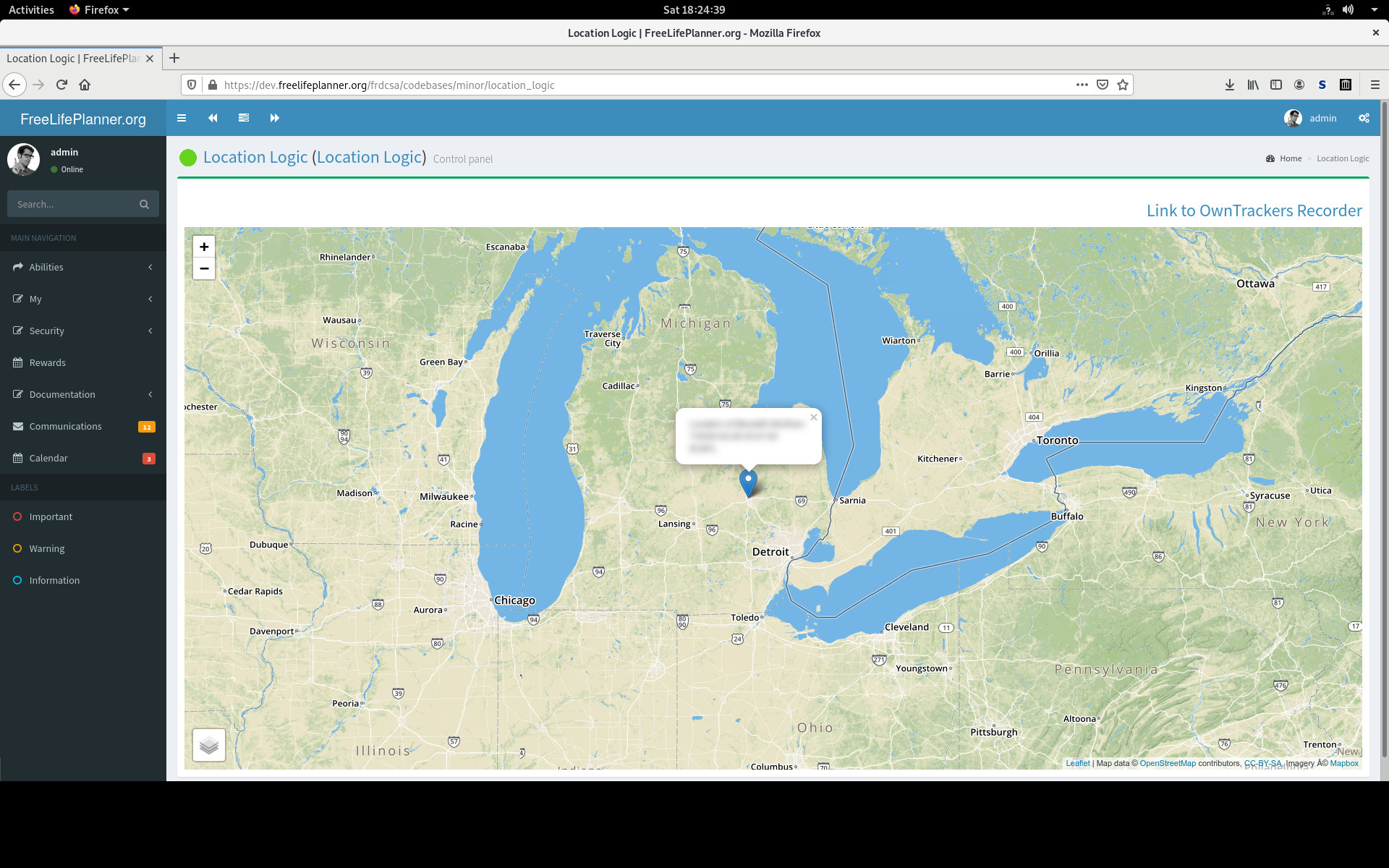

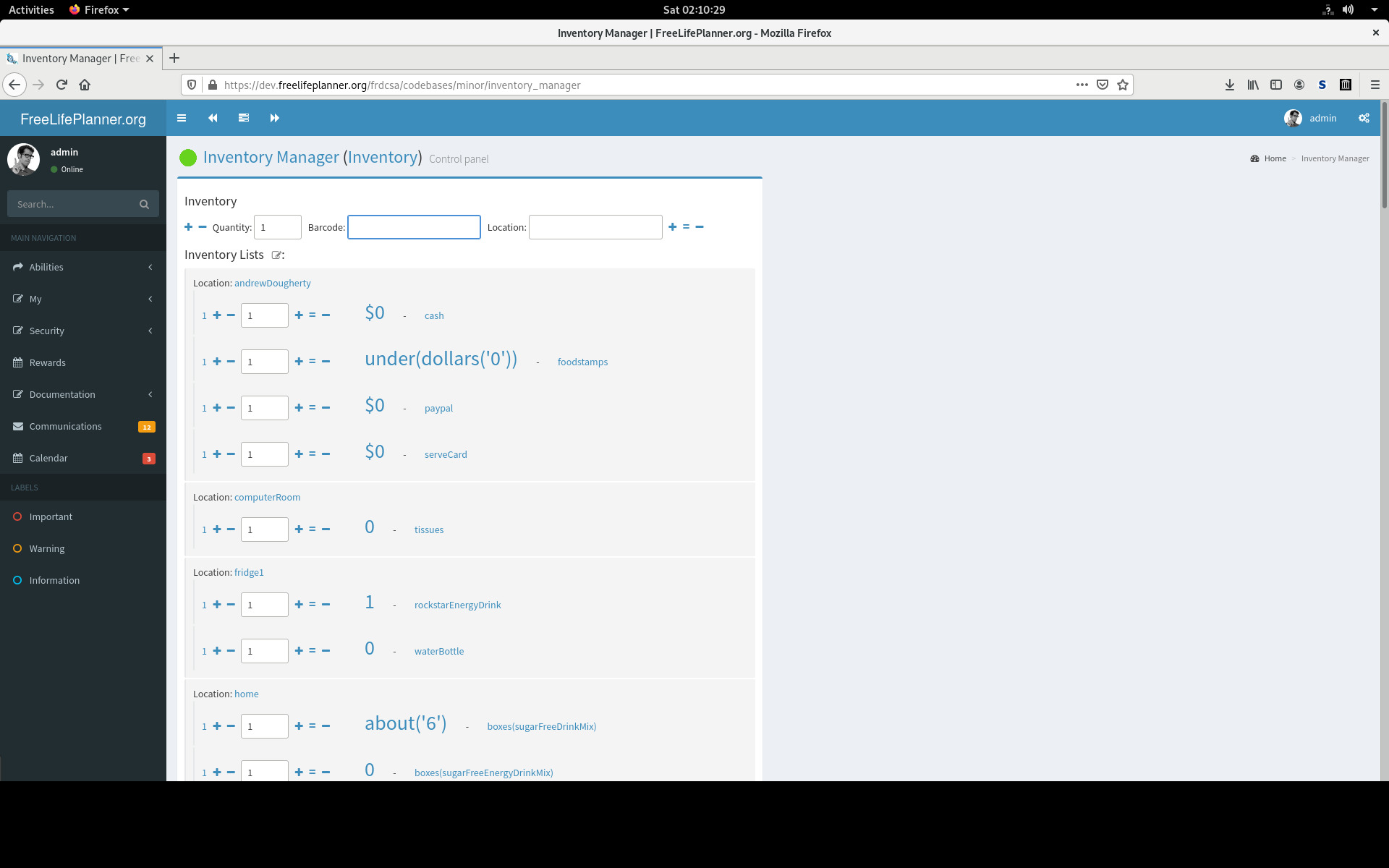

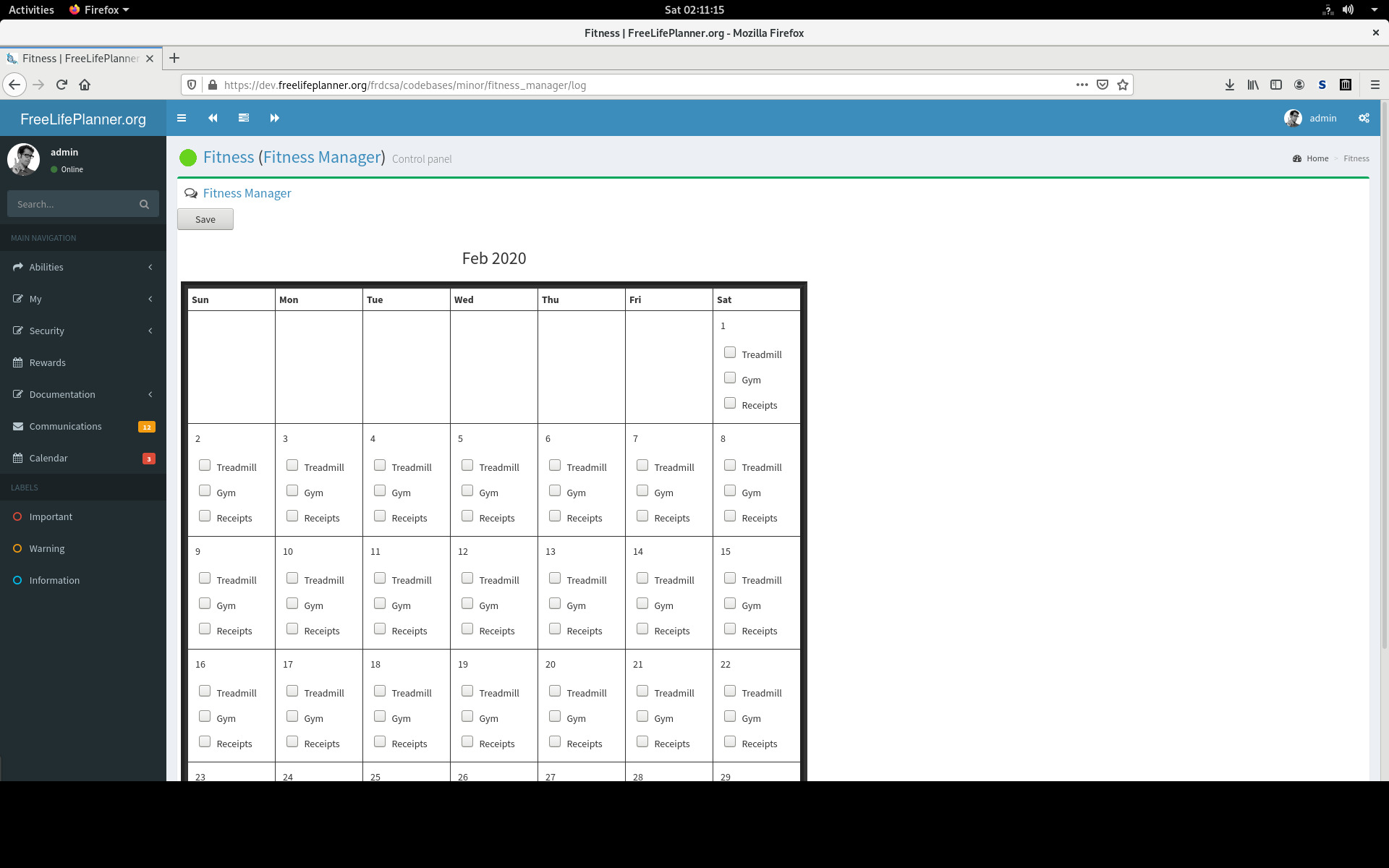

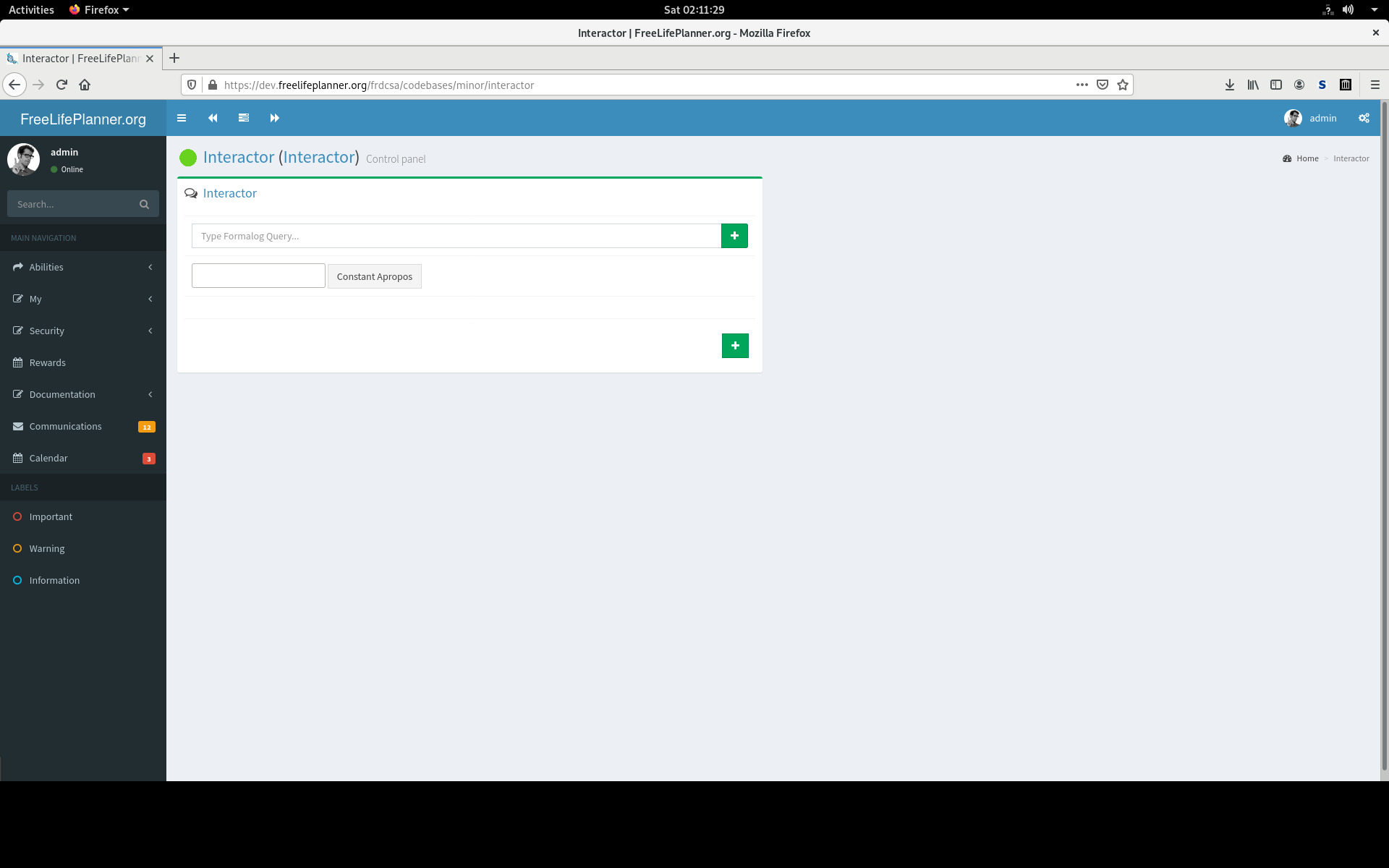

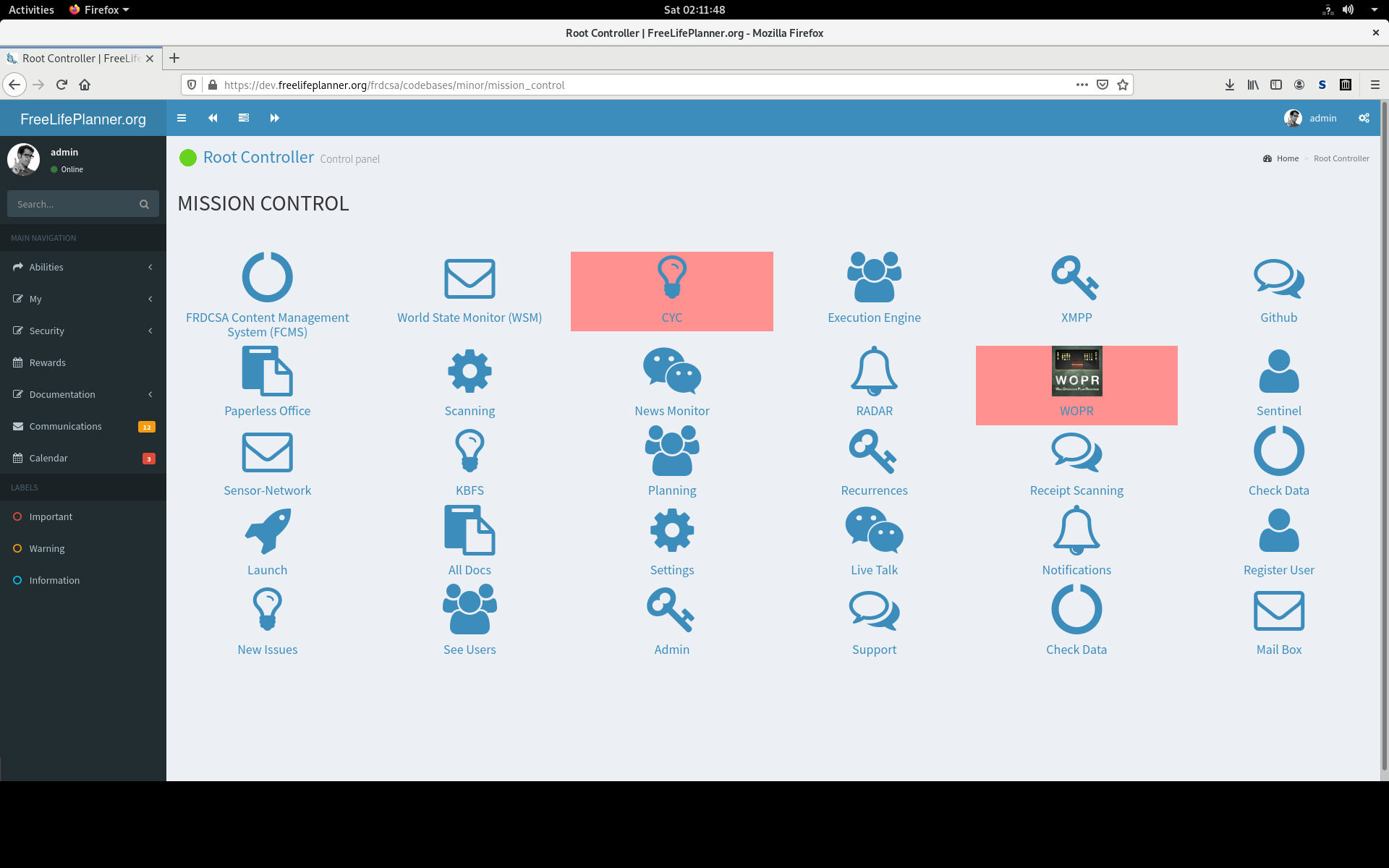

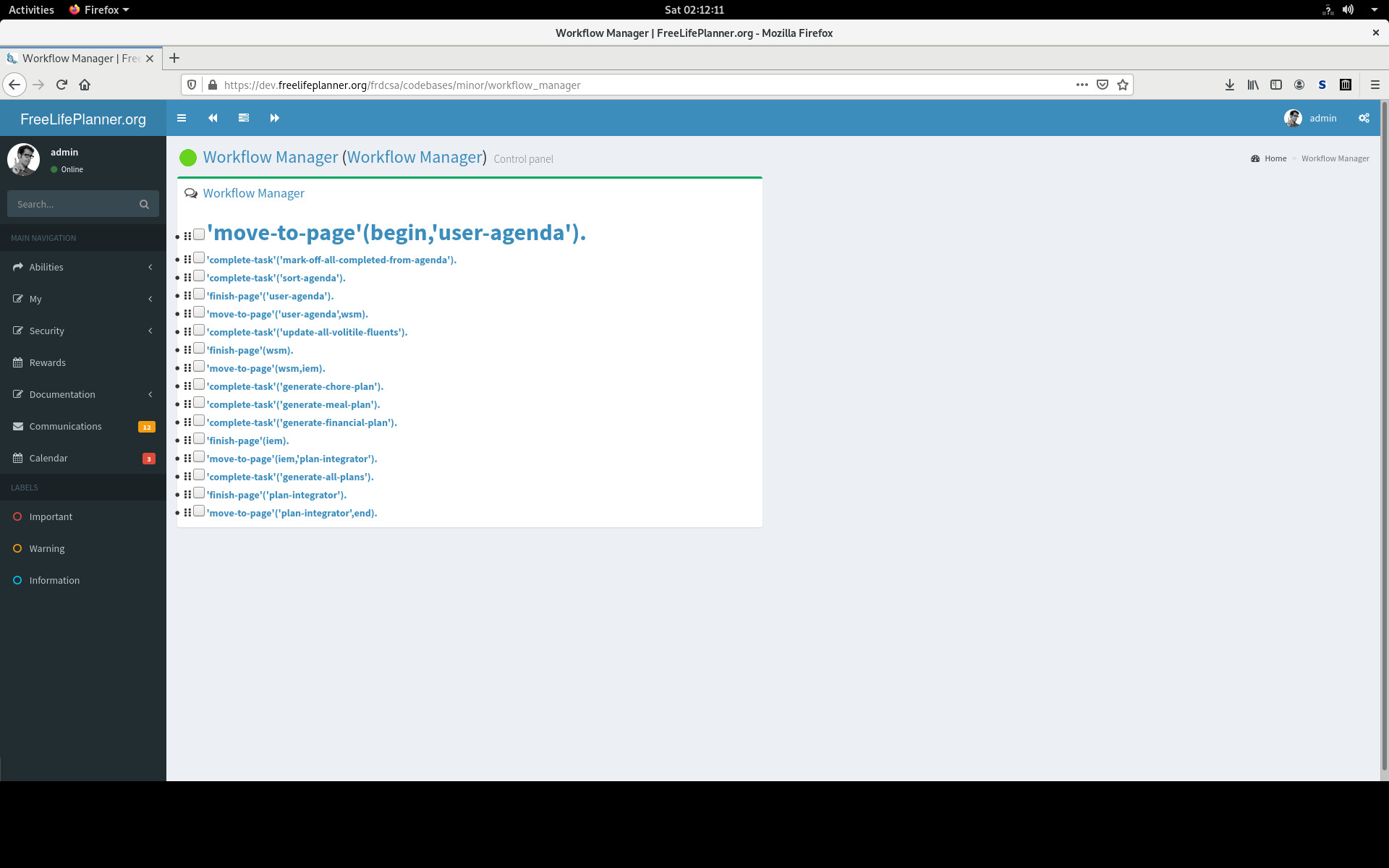

And here are more screenshots of FLP: